The Ultimate Guide: Understanding The Psychology of Collective Abandonment

At a planetary scale, a profound cognitive dissonance is evident, and it reveals a challenging aspect of human psychology. Corporate AI investment reached $252.3 billion in 2024, and tech giants are projected to spend $364 billion on AI infrastructure in 2025. Meanwhile, the United Nations grapples with a dire "race to bankruptcy," facing $700 million in arrears (UN, 2024), and the annual funding gap that must be closed to ensure basic human dignity globally stands at an estimated $4.2 trillion. This disparity highlights a collective abandonment of pressing human needs in favor of abstract technological pursuits.

What psychological mechanisms enable us to pour hundreds of billions into artificial intelligence while 600 million people are projected to live in extreme poverty by 2030? The answer lies deeply embedded in the architecture of human decision-making. Key factors include our inherent proximity bias, the seductive allure of technological solutionism, and the widespread diffusion of responsibility, all exacerbated by a manufactured sense of urgency around AI development. This complex interplay forms the core of the psychology of collective abandonment, allowing societal neglect to persist despite vast resources.

The Immediate vs. The Distant: Overcoming Proximity Bias

Human beings are inherently wired to respond more forcefully to what is immediate, tangible, and directly observable. A sophisticated chatbot instantly answering your queries or a new AI-powered app enhancing your daily convenience feels far more real and impactful than the plight of a child facing hunger on another continent. This phenomenon is known as proximity bias, a deeply ingrained human tendency to prioritize issues or individuals that are geographically or psychologically closer to us, even when distant problems carry significantly greater moral weight or societal importance (Psychology Today, 2024).

AI companies masterfully leverage this bias. They deliver products and services directly to your hand or screen, offering immediate benefits like enhanced efficiency, unparalleled convenience, or the thrill of novelty. The associated costs, however, often remain abstract and out of sight. For instance, neither the staggering energy consumption of AI--183 terawatt-hours in 2024, projected to reach 426 TWh by 2030--nor the 16 to 33 billion gallons of water it is projected to consume annually by 2028 is visible in our daily lives. We don't witness aquifers depleting firsthand, nor do we directly experience the blackouts that Mexican and Irish villages sometimes face due to the immense power demands of new data centers. This invisibility contributes to the psychology of collective abandonment, as we overlook indirect consequences.
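To make the energy projection quoted above a little more tangible, the implied yearly growth rate can be worked out directly. The following is a minimal sketch that assumes smooth compound growth between the two quoted figures. That is an illustrative simplification, not a claim about how the projection was produced, and the function name `implied_annual_growth` is hypothetical.

```python
# Figures as quoted in this article, in terawatt-hours (not independently verified).
ENERGY_2024_TWH = 183.0
ENERGY_2030_TWH = 426.0


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Return the constant yearly growth rate that turns `start` into `end`.

    Assumes smooth compound growth, which is a simplification.
    """
    return (end / start) ** (1.0 / years) - 1.0


rate = implied_annual_growth(ENERGY_2024_TWH, ENERGY_2030_TWH, 2030 - 2024)
print(f"Implied growth: about {rate:.1%} per year")
```

Under that assumption, consumption more than doubles in six years, a pace that is easy to miss when the cost never appears on anyone's screen.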

In stark contrast, the realities of global poverty are often abstract for those in affluent societies. The daily struggle of a mother forced to choose between feeding her children or buying essential medicine doesn't register in our immediate experience. Schools lacking teachers, clinics without vital medications, or communities without clean water sources remain distant, statistical data points rather than visceral realities. This creates a profound tangibility asymmetry: the benefits of AI feel concrete and personal, while its environmental costs and the suffering from global inaction remain abstract.

For many whose food, water, and shelter are guaranteed, the benefits of the UN's Sustainable Development Goals (SDGs) also feel abstract. Conversely, the profound suffering and long-term costs of failing to achieve these goals remain largely unreal to them. Our brains struggle intensely with this inverted relationship, where psychological salience--what feels real and immediate--often overshadows actual importance. Overcoming this proximity bias is crucial to addressing the psychology of collective abandonment on a global scale.

The Allure of Quick Fixes: Decoding Technological Solutionism

Humans frequently exhibit a preference for elegant, technical solutions to what are fundamentally messy and complex human problems. This tendency is commonly called technological solutionism, a term popularized by the technology critic Evgeny Morozov. This preference often leads us to seek engineering-based answers rather than confronting uncomfortable truths about human behavior, systemic inequalities, or entrenched power structures. It's a significant driver in the psychology of collective abandonment, as it distracts from deeper issues.

Firstly, technology offers an appealing illusion of control. There's a pervasive fantasy that intricate global challenges, such as climate change or poverty, can be neatly solved through sophisticated engineering advancements. Developing new AI models, for example, often appears more achievable and manageable than the monumental task of ending poverty. This is because AI development can be compartmentalized as a technical challenge, whereas eradicating poverty demands a profound confrontation with wealth distribution, systemic injustice, and uncomfortable societal truths that require fundamental behavioral shifts and power restructuring. This compartmentalization allows us to avoid the messier human elements.

Secondly, this focus on technological fixes can lead to moral licensing. When we invest heavily in AI, particularly when it's framed as a tool to "solve" grand problems--like aiding healthcare diagnoses or improving climate modeling--we can give ourselves psychological permission to overlook or even ignore how those very investments might exacerbate other pressing issues. The narrative often becomes, "We're diligently working on the future," which then implicitly justifies a form of collective abandonment of the present's urgent needs. Executives approving billions for AI infrastructure might genuinely believe they are contributing to progress, even as that same infrastructure drains vital resources like water and electricity from communities that desperately need them for basic human services.

Thirdly, future discounting plays a crucial role. This psychological bias leads us to value near-term gains over long-term consequences. AI promises tangible returns, often within the next financial quarter, creating an immediate sense of urgency and reward. In contrast, the 2030 deadline for the UN's Sustainable Development Goals, while rapidly approaching, can still feel distant. This temporal gap makes AI investments appear urgent and essential, while commitment to the SDGs can seem optional or deferrable. This preference for immediate gratification over future well-being is a core component of how technological solutionism perpetuates the psychology of collective abandonment, allowing us to prioritize short-term technological advancements over foundational human needs.
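The pull of near-term rewards can be illustrated with a small calculation. The sketch below uses the standard exponential discounting formula from economics as a stand-in; behavioral research often describes other curves, such as hyperbolic discounting, so this is only one possible model. The 10% rate, the payoff of 100, and the six-year horizon are hypothetical values chosen for illustration, not figures from any study.

```python
def present_value(future_value: float, years: float, annual_rate: float) -> float:
    """Exponential discounting: value today of a payoff received `years` from now."""
    return future_value / (1.0 + annual_rate) ** years


ASSUMED_RATE = 0.10  # hypothetical 10% yearly discount rate, for illustration only
PAYOFF = 100.0       # the same nominal payoff in both cases (hypothetical units)

next_quarter = present_value(PAYOFF, 0.25, ASSUMED_RATE)  # one financial quarter away
six_years_out = present_value(PAYOFF, 6.0, ASSUMED_RATE)  # a multi-year horizon

print(f"Payoff next quarter is valued today at {next_quarter:.1f}")
print(f"Payoff in six years is valued today at {six_years_out:.1f}")
```

Even with identical nominal payoffs, the distant one shrinks to a little over half its face value under these assumptions, which is one way to see why a deadline several years away can feel deferrable.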

When Everyone is Responsible: Addressing Diffusion of Responsibility

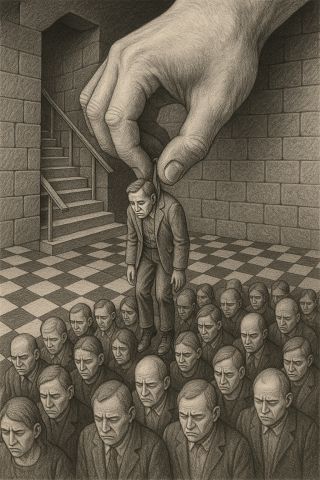

Perhaps one of the most potent psychological mechanisms contributing to collective abandonment is the diffusion of responsibility, essentially the bystander effect scaled to planetary proportions. In situations where responsibility is broadly distributed among many individuals or entities, the paradoxical outcome is that no single person or group feels genuinely accountable. This phenomenon creates a critical barrier to addressing global challenges, as the impetus for action dissipates across the collective.

Consider the mindset of a tech decision-maker allocating billions to AI development. Their choice isn't typically framed as "investing in AI versus ending child hunger." Instead, the decision is often perceived as "investing in AI because competitors are doing it" versus "not investing." The crucial counterfactual--what profound good could be achieved with those billions if directed elsewhere--rarely enters their immediate decision-making framework. Similarly, the responsibility for funding the Sustainable Development Goals is so widely diffused across humanity, governments, and international bodies that it effectively becomes the responsibility of no one specific entity. This dilution of accountability is a hallmark of the psychology of collective abandonment.

This lack of individual accountability is further reinforced by system justification, a psychological tendency to defend and rationalize existing social, economic, and political systems. Statements like "This is simply how markets operate," or "Capital naturally flows to opportunities," are frequently used to explain and defend the status quo. Each of these statements, when examined in isolation, might appear defensible. However, when taken collectively, they construct a formidable psychological fortress that shields the current system from critical moral scrutiny. This entrenched thinking prevents a re-evaluation of how resources are allocated and perpetuates inaction on urgent global issues.

Despite the immense global financial wealth, which reached an astonishing $305 trillion in 2024 (World Bank, 2024), the funds necessary to ensure basic human dignity are not mobilized. The money undoubtedly exists to provide every human with food, water, shelter, healthcare, and education--an estimated $4.2 trillion annually. Yet, the pervasive diffusion of responsibility means that no individual, institution, or nation feels a direct, compelling obligation to mobilize even a fraction of this wealth. This systemic failure underscores how deeply the psychology of collective abandonment is embedded in our global decision-making frameworks, preventing essential resources from reaching those in desperate need.
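The scale of the figures quoted in this article is easier to grasp as ratios. The sketch below simply divides the article's own numbers; the helper `share` is a hypothetical name, and the figures are taken as quoted rather than independently verified. Note that global wealth is a stock while the funding gap is an annual flow, so the first ratio is only a rough sense of proportion.

```python
# Figures as quoted in this article, in US dollars (not independently verified).
GLOBAL_WEALTH = 305e12         # global financial wealth, 2024 (a stock)
ANNUAL_DIGNITY_GAP = 4.2e12    # yearly funding gap for basic human dignity (a flow)
AI_INVESTMENT_2024 = 252.3e9   # corporate AI investment, 2024
UN_ARREARS = 700e6             # UN arrears


def share(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100.0 * part / whole


gap_vs_wealth = share(ANNUAL_DIGNITY_GAP, GLOBAL_WEALTH)
ai_vs_gap = share(AI_INVESTMENT_2024, ANNUAL_DIGNITY_GAP)
arrears_vs_ai = share(UN_ARREARS, AI_INVESTMENT_2024)

print(f"Annual gap as a share of global wealth: {gap_vs_wealth:.2f}%")
print(f"2024 AI investment as a share of the annual gap: {ai_vs_gap:.2f}%")
print(f"UN arrears as a share of 2024 AI investment: {arrears_vs_ai:.2f}%")
```

On these numbers, the annual gap is under 2% of quoted global wealth, and the UN's arrears are well under 1% of a single year of corporate AI investment.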

The FOMO Effect: Why Fear Trumps Empathy in Investment

The current AI investment frenzy vividly illustrates classic bubble psychology, where the fear of missing out (FOMO) overrides rational assessment and ethical considerations. When AI startups collectively raise an unprecedented $110 billion in a single year, as they did in 2024--a staggering 62% increase--and markets can plummet by $800 billion in a single day on news of a cheaper competitor, we are witnessing panic-driven behavior rather than principled investment decisions. This intense competitive pressure is a powerful mechanism contributing to the psychology of collective abandonment, as vital resources are diverted by speculative fear.

FOMO powerfully hijacks our innate social comparison mechanisms. Instead of evaluating investments against absolute measures of genuine value, societal benefit, or long-term sustainability, decisions are primarily made relative to what competitors and peers are doing. The imperative becomes: "If your competitor is investing heavily in AI, then you must do the same." This competitive reflex often takes precedence, regardless of whether the investment genuinely creates new value, addresses critical needs, or merely inflates market valuations based on speculative hype. The drive to keep pace with others overshadows a deeper analysis of impact or ethical implications.

This dynamic creates a perilous trap: the more irrational and speculative the investment trend becomes, the more urgent and unavoidable it feels to participate. In this high-stakes environment, moral considerations--such as the human costs of capital misallocation, the environmental impact of data centers, or the opportunity cost of neglecting global poverty--become increasingly irrelevant. They are sidelined by the intense competitive panic and the perceived necessity to secure a market position. This overwhelming pressure to conform and compete actively suppresses empathy and ethical reasoning.

The result is a form of collective abandonment, where immense financial resources are channeled into a speculative technological race while fundamental human needs remain chronically underfunded. The urgency created by FOMO effectively acts as a moral anesthetic, dulling our collective sensitivity to the profound disparities and ethical dilemmas at play. Breaking free from this cycle requires a deliberate shift from fear-driven competition to value-driven, ethically conscious investment strategies that prioritize human well-being over speculative gains.

Paving the Way Forward: Embracing ProSocial AI

Breaking the entrenched psychological patterns that lead to collective abandonment demands a fundamental restructuring of our decision-making frameworks. The concept of ProSocial AI offers a psychologically essential pathway forward. Instead of merely asking, "What can AI do?" we must pivot to the more profound question: "What should AI do to genuinely enhance human dignity and foster planetary health?" This reframing is critical for shifting our collective priorities.

This new perspective activates entirely different psychological mechanisms. Rather than falling prey to technological solutionism, it actively invokes moral reasoning and ethical considerations. Instead of being limited by proximity bias, it demands proactive perspective-taking, urging us to deeply imagine and understand the experiences of those who bear the costs of technological advancement or global neglect. This reframing counters the passive acceptance of collective abandonment by fostering active empathy. Instead of allowing diffusion of responsibility to paralyze action, ProSocial AI creates direct accountability by explicitly linking AI development outcomes to specific, measurable human well-being and environmental goals.

The idea of hybrid intelligence--a complementary partnership between artificial and natural intelligence--is central to this shift. It acknowledges that truly critical decisions, especially those involving ethical dilemmas, human welfare, and complex societal impacts, require human judgment, empathy, and moral reasoning that AI, by its very nature, cannot replicate. When local communities directly affected by data center deployments are given a genuine voice in siting and operational decisions, proximity bias is effectively inverted, working for moral and equitable outcomes rather than against them. This direct engagement ensures that those most impacted have agency.

Furthermore, when the success of AI development is rigorously evaluated against its contribution to achieving Sustainable Development Goals, rather than solely by quarterly financial returns, future discounting is directly countered by present-focused accountability. This approach forces a longer-term perspective and integrates societal impact into the core metrics of success. It also demands a renewed emphasis on human agency amid the rise of AI, ensuring that human decision-making power remains paramount. Every algorithm is ultimately a product of human choices about whose interests matter. Democratizing these choices, particularly by including voices from the Global South who disproportionately bear the costs of climate change and poverty, directly counters the effects of diffusion of responsibility and system justification, fostering a more equitable and responsible future for AI.

Empowering Change: Practical Psychological Leverage Points

Transforming the psychology of collective abandonment requires strategic intervention at several psychological leverage points. These practical approaches can shift ingrained behaviors and decision-making processes, making invisible costs visible and fostering a greater sense of shared responsibility. By understanding these mechanisms, individuals and institutions can drive meaningful change.

Shareholder activism during proxy season offers a powerful avenue to convert diffused responsibility into direct accountability. By voting for resolutions that mandate comprehensive AI environmental impact reporting or tie executive compensation to tangible sustainability metrics, shareholders can bring the previously invisible costs of AI development--such as energy consumption and water usage--into corporate governance, where they can be seen and acted on. This directly counters the tangibility asymmetry that often allows abstract costs to be ignored. It forces corporations to acknowledge their broader societal and environmental footprint (Stanford, 2024).

Institutional divestment advocacy is another potent tool. When universities, large pension funds, or influential religious organizations publicly commit to divesting from extractive AI technologies and instead invest in regenerative, ProSocial alternatives, they activate social proof. Humans inherently look to others, especially respected institutions, to determine appropriate behavior and validate new norms. Such public shifts signal a powerful change in ethical standards, encouraging other institutions and individuals to re-evaluate their own investment portfolios and values. This collective action can create a ripple effect, moving capital towards more ethical endeavors.

Crucially, narrative reframing holds immense power. When we highlight that a single ChatGPT conversation consumes about a plastic bottle's worth of water, we transform an abstract environmental cost into a tangible, relatable impact. This concrete example makes the environmental footprint of AI immediately understandable and compelling. Similarly, asking critical questions like "What problems does this AI truly solve versus what problems does it exacerbate?" activates critical thinking, directly challenging the allure of technological solutionism.

Furthermore, reframing the "inevitable AI future" as "choosing AI's role in a human-centric future" is vital. This shift in language restores human agency, countering the learned helplessness that can arise from technological determinism. It reminds us that the trajectory of AI is not predetermined but is, in fact, a series of conscious human choices. These psychological leverage points collectively empower us to challenge the status quo, fostering a future where human dignity and planetary health are prioritized over abstract technological pursuits and the insidious psychology of collective abandonment.

The tragedy of our current moment is the collective choice to prioritize the abstraction of AI progress over the stark reality of human suffering. However, the profound opportunity lies in recognizing that psychology works both ways. The very same mechanisms that currently trap us in cycles of collective abandonment can, when strategically restructured and consciously leveraged, guide us toward choices that honor our shared humanity and safeguard planetary health. The ultimate answer to this global dilemma does not reside in more complex algorithms, but in a renewed recognition of our shared human connection and an unwavering commitment to act accordingly.